Host a Jupyter Notebook as an API

Often times data scientists have Jupyter notebooks that they want to run in a recurring manner. Often this is a Jupyter notebook that has an analysis that needs to frequently be rerun with newer data. Another situation where having a notebook rerun may be useful is if the notebook trains a machine learning model and the model needs to be retrained on more recent data points. There are a number of ways that such a notebook could be rerun, depending on the use case:

- A human being can manually press a button to cause the notebook to run,

- The notebook can be run on a fixed schedule, or

- An API can be set up so that when the API receives a request the notebook is then run.

At first glance that list seems like it’s increasing in technical complexity–setting up a whole API endpoint is certainly more complicated than opening a notebook and hitting “run all cells.” In practice, all three of these can be done very easily with Saturn Cloud jobs. With Saturn Cloud jobs you can create data science pipelines with very little effort–avoiding the work of having to set up your own entire web APIs, cron schedulers, and other distractions from data science work.

What is a Saturn Cloud job?

A Saturn Cloud job is a computing environment set up to run recurring tasks. You specify the size of the hardware to run the task on (including if it has a GPU or Dask cluster) as well as the code to run, typically through a git repo. You can then use the resource as part of your data science pipeline to have the task execute when you need it or on a schedule.

Using a Saturn Cloud job to run a notebook

One neat feature of jobs is that they can be triggered in many ways. When creating the job you can select to have the job repeat at a set frequency, like weekly. If you don’t give it a schedule, you can trigger the job by pressing the start button on the resource page of the job.

What’s really great is the API functionality of jobs. If you have a job set to not run on a fixed schedule, you can have it instead trigger every time an API endpoint is hit. I’ll walk through a quick example of having a notebook run after an API is called. I will be using an example notebook from the Saturn Cloud GitHub account that only has one code cell which logs that it’s running (you’d probably want to have more interesting things in the notebook):

import logging

logging.getLogger().setLevel(logging.INFO)

logging.info('Job is successfully running!')

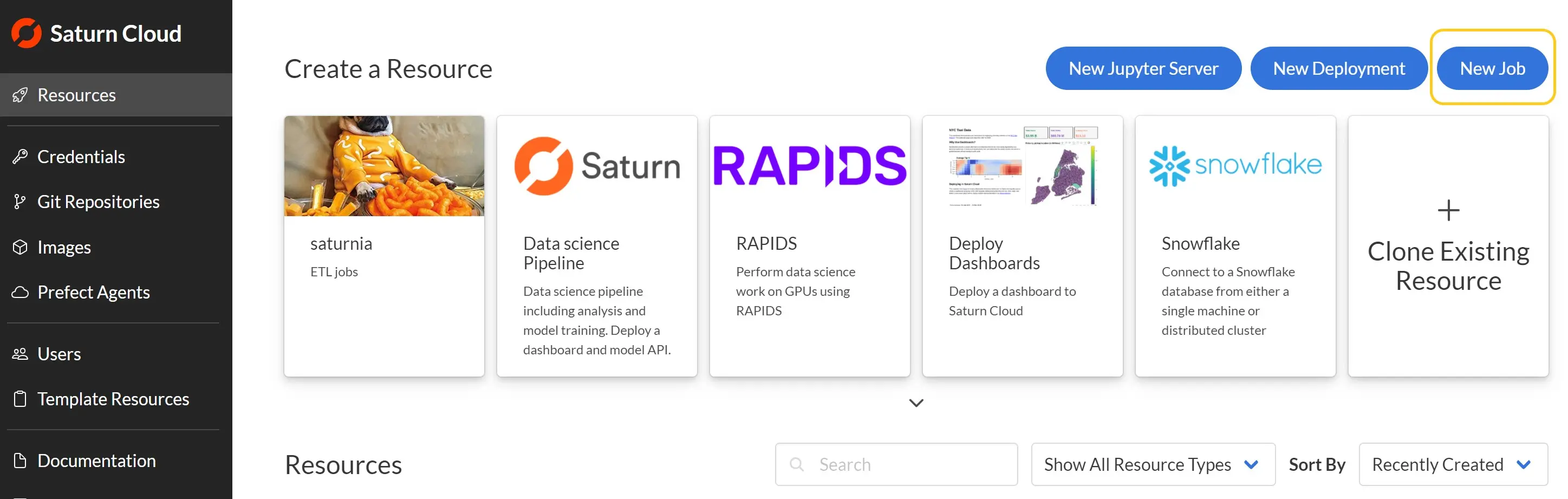

To create a job for this, first press the New Job button on the upper right corner of the Resources page. This will let you choose the settings for the notebook.

In the setup, you’ll want to choose the following settings:

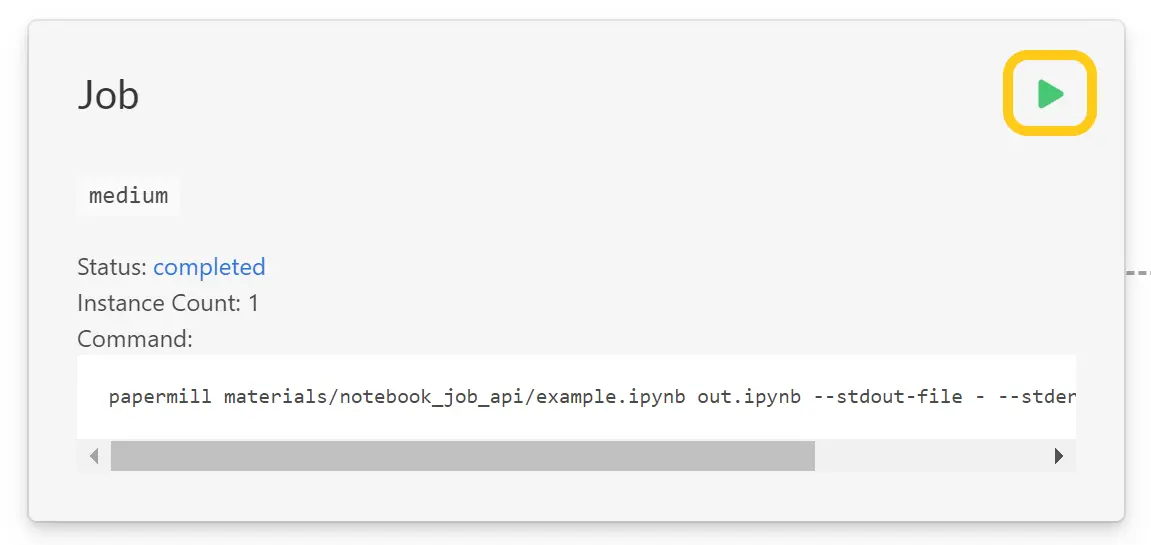

- Command -

papermill materials/notebook_job_api/example.ipynb out.ipynb --stdout-file - --stderr-file - --kernel python3. This command will use the Python package papermill to execute all the cells of a Jupyter notebook. It’s set up to use the example notebook from the Saturn Cloud GitHub repo (which we have to attach to the job in a later step). - Hardware & Size - Since this notebook is very small, select the smallest CPU resource you have available.

- Image - This example notebook doesn’t have any complex dependencies, so the base

saturncloud/saturnimage will do. - Extra Packages - Under extra packages in the Pip Install section, add the package

papermill. This will let us execute the Jupyter Notebook from the command line. - Run this job on a schedule - leave this unchecked. This option lets you run the job on a fixed repeating schedule, but since we want to use the job as an API we do not need it.

Once you’ve created the job, on the resource page for the job in the Git Repositories section, select New Git Repository and add git@github.com:saturncloud/materials.git. This is the

GitHub repo with the notebook so the job will run the correct code. By default a job will use the latest code from the default branch, but you can specify custom branches or commits.

With these steps complete you can now already run the job manually by pressing the green start button on the resource page of the job:

If the job successfully runs, the status will be Completed, which will give you a link to the logs showing any output from the notebook. Here you can see that the “Job is successfully running!” log we printed from the example notebook shows up in the logs:

Input Notebook: materials/notebook_job_api/example.ipynb

Output Notebook: out.ipynb

Executing: 0%| | 0/2 [00:00<?, ?cell/s]Executing notebook with kernel: python3

INFO:root:Job is successfully running!

Executing: 50%|█████ | 1/2 [00:00<00:00, 1.20cell/s]

Executing: 100%|██████████| 2/2 [00:00<00:00, 2.09cell/s]

If the job fails to complete you’ll see an error status which also gives the logs of what happened.

Running a Saturn Cloud job as an API

This job is already set up to run as an API, we just need to send an HTTP request to the appropriate URL. First, to ensure that the request is authenticated, you’ll need your Saturn Cloud user token, which you can get from the Settings page of Saturn Cloud. You will also need the Job ID of your job, which is the hexadecimal value in the URL of the job’s resource page: https://app.community.saturnenterprise.io/dash/resources/job/{job_id}.

From Python, you can then call the API and trigger the job using the code below. Note that any HTTP request sending system, like curl or Postman, would work fine too:

import requests

user_token = "youusertoken" # (don't save this directly in a file!)

job_id = "yourjobid"

url = f'https://app.community.saturnenterprise.io/api/jobs/{job_id}/start'

headers={"Authorization": f"token {user_token}"}

r = requests.post(url, headers=headers)

And when you run that code you will cause your job resource to start running!

So with Saturn Cloud, you can easily take a notebook you have been working on and set it to run programmatically at any time with an API request–above and beyond the more standard methods of manually running the job or having it automated on a schedule. This is a great way get the value of productionizing code as part of a data science workflow without having to go through the burden of coding up an entire web API to support it. If you want to try Saturn Cloud yourself, our online hosted version will let you get started with access to instances with a GPU as well as a Dask cluster.

Saturn Cloud provides customizable, ready-to-use cloud environments

for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without having to switch tools.