Easily Build LLMs With Saturn Cloud

LLMs are making waves in today’s digital landscape thanks to their capabilities, from enhancing customer interactions and bridging language barriers to producing creative content and facilitating adaptive learning. However, they also come with their fair share of complexity. Because these models are so large, working with them is demanding and often exceeds the capabilities of a standard workstation.

At Saturn Cloud, we’re providing every data science professional with the tools required to harness the potential of LLMs. Our platform offers a robust, scalable, cloud-based environment that supports complex LLMs, alleviating common challenges tied to LLMs — model training, retraining, finetuning, deployment, and, most importantly, the need for extensive computational resources.

We’ll introduce you to Saturn Cloud’s suite of LLM demos – simple, actionable guides designed to help you leverage these advanced AI tools in your projects.

Getting Started with Saturn Cloud

Before getting started, make sure you have a Saturn Cloud account. Users can launch all of our current LLM demos within the platform. For more pricing information, click here.

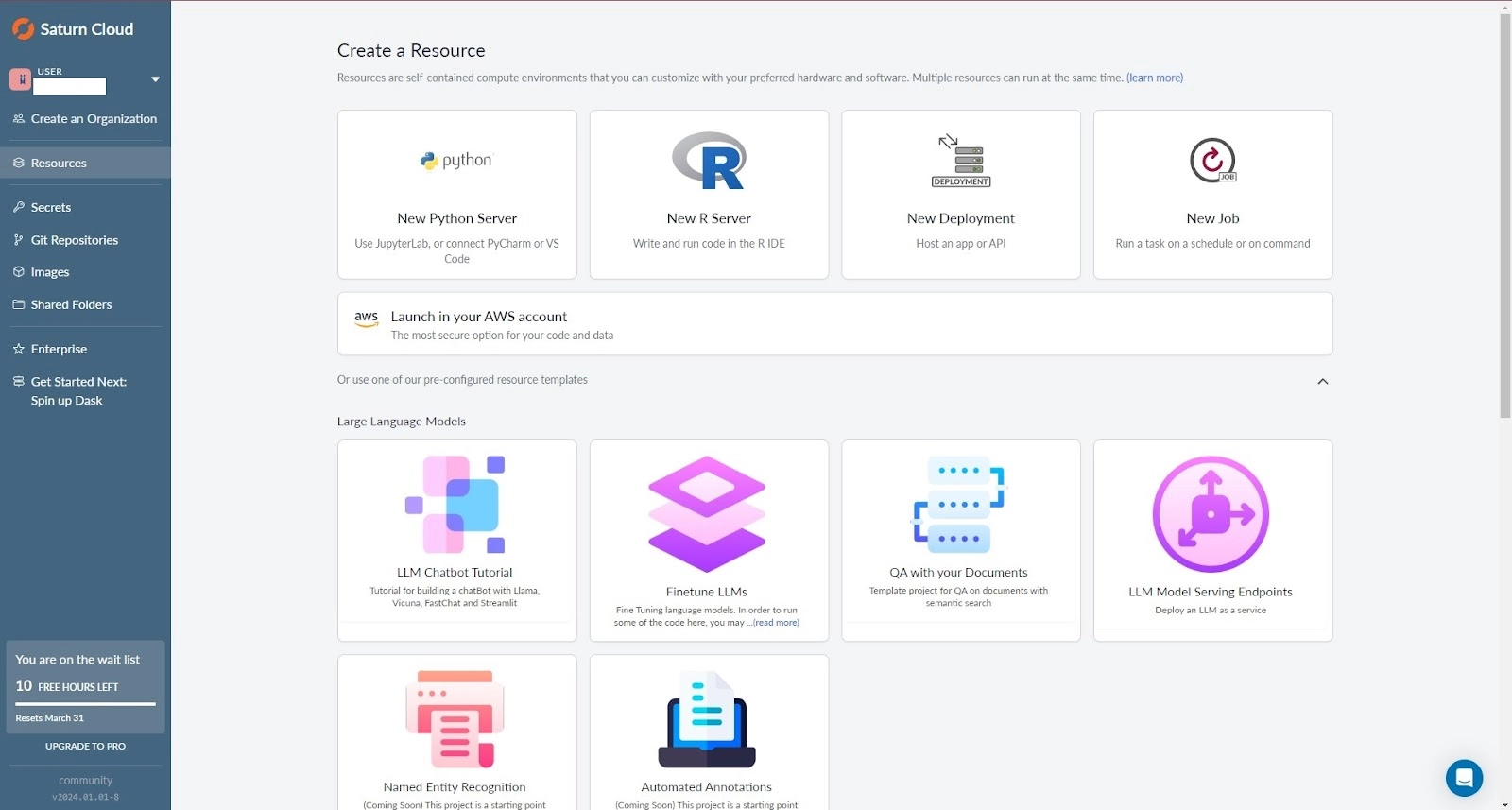

To sign up for Saturn Cloud, visit saturncloud.io. After registration, you’ll be greeted with a dashboard.

[Optional] Add an SSH Key for SSH & VS Code Access

Optionally, you can add an SSH key to your Saturn Cloud account so you can work through the demos in a VS Code session on your local machine. With VS Code connected, applications launched by Streamlit on the instance are accessible through localhost.

To add an SSH key, click here. Note that you must also enable SSH connections on your demo resource, using the “Enable SSH for a Jupyter server or R server resource” option.

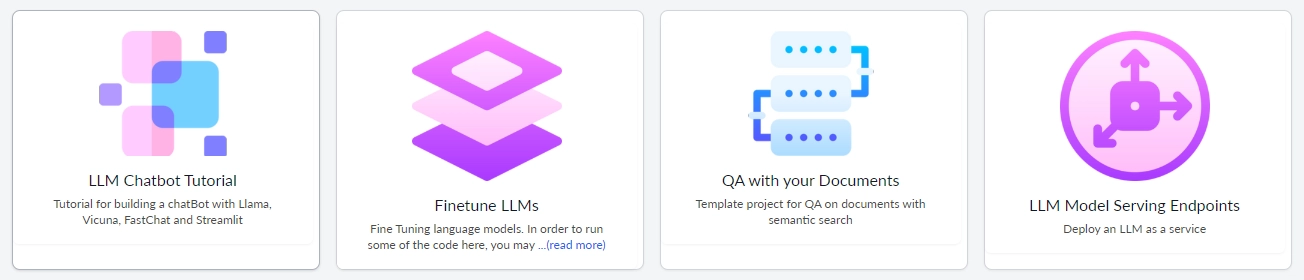

LLM Demos

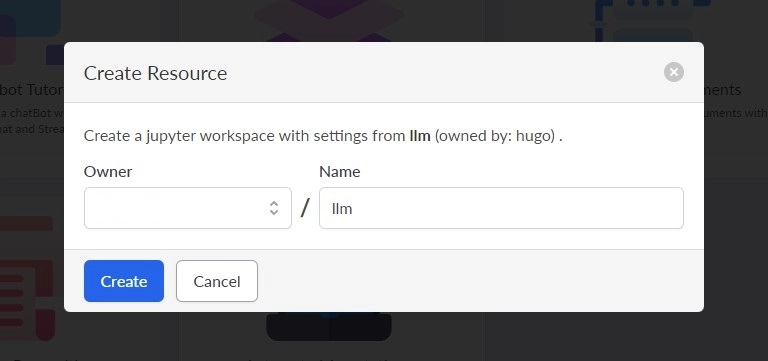

All of our demos come with easy one-click deployment. Click on any of the demos and click “Create” to get started. Note that if you previously created demos under the free tier, you may need to delete those resources before launching other demos because of disk space limitations.

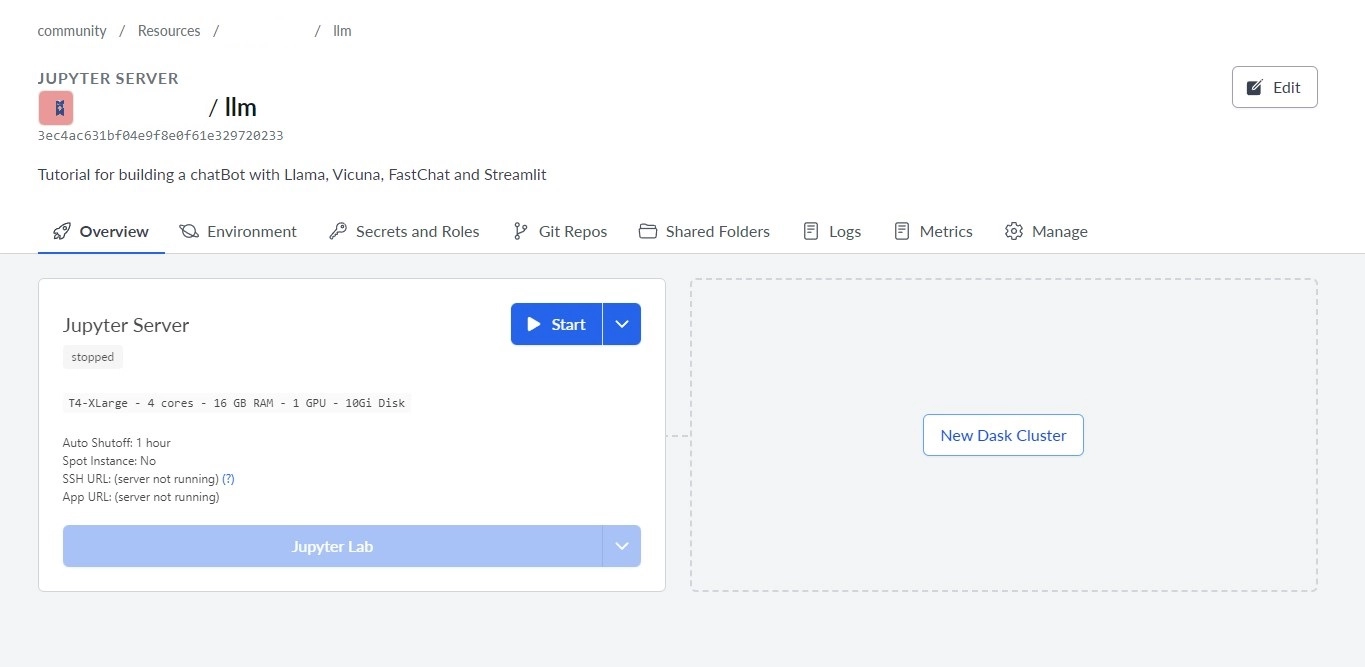

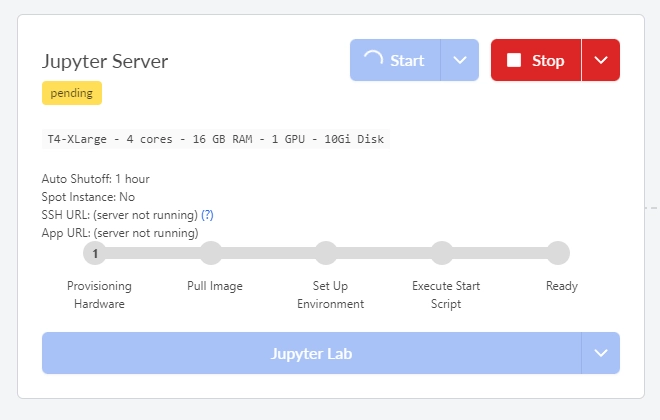

You will then see a resource page and a stopped Jupyter server. Remember to enable SSH connections by clicking “Edit” on the top right and enabling SSH connections at the very bottom of the settings section under “Additional Features” (as mentioned in the previous section). Options are also available to select your hardware, disk space, and instance size. Once you have finished configuring your resources, click “Save.”

Once you’ve clicked save, you should be brought back to your Jupyter Server resource page. Click start on the server and allow around 10-15 minutes for the server to provision. Once provisioning is complete, click the “Jupyter Lab” button or use the SSH URL in an SSH or VS Code session to access your instance.

An SSH URL and App URL are provided once provisioning has finished. Users can also connect to the Jupyter Lab instance by clicking the blue Jupyter Lab button to access the Jupyter server on the web page.

LLM Chatbot Tutorial

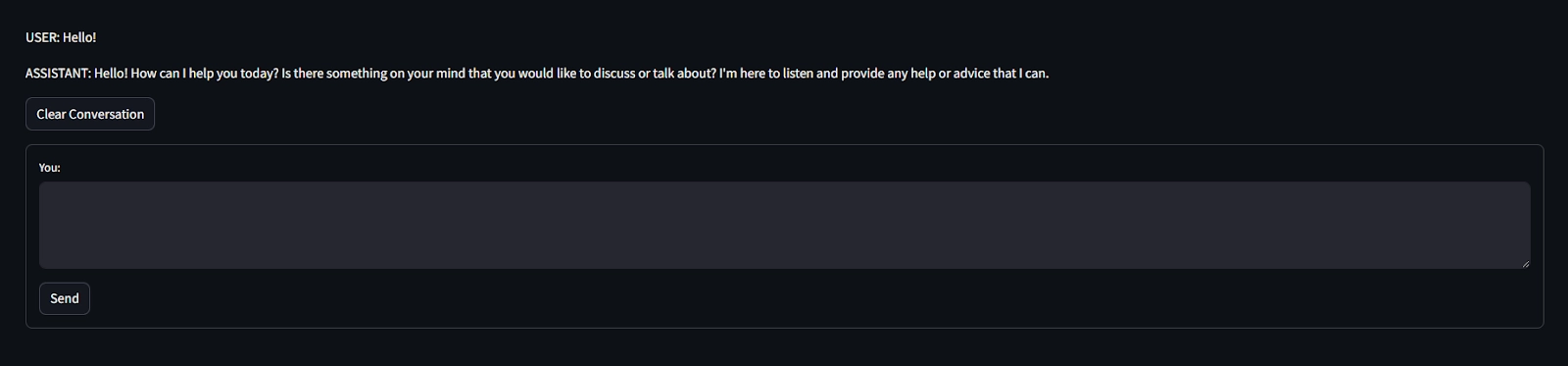

In our LLM Chatbot Tutorial, we show how easy it is to deploy a simple chatbot and use it live within minutes. You can launch the chatbot either on localhost through VS Code or by launching the application on Saturn Cloud and opening it via the “App URL” shown in the screenshot above.

To launch the application, enter this command into a terminal session: `streamlit run streamlit/chat.py`

The value of this chatbot tutorial lies in its nearly effortless development process. It is especially useful for data scientists who want to spend more time refining the chatbot model and less on the deployment details needed to get an interactive app running. Using a Jupyter instance for model training and leveraging Saturn Cloud for immediate deployment allows data scientists to access their applications on a local machine while using scalable cloud resources.

Finetune LLMs

Pre-trained LLMs are engineered to grasp a broad understanding of language, serving versatile applications. However, specialized tasks often demand domain-specific knowledge, and that is where finetuning becomes essential.

Let’s consider our previous “LLM Chatbot Tutorial.” It adeptly handles diverse conversations, yet imagine a scenario where the chatbot is used as an art museum guide. It should recognize specific art terms, comprehend different art styles, and even understand the museum’s layout. Such a level of specificity isn’t inherent to the pre-trained model, but through finetuning on relevant datasets, we can enhance its capabilities. That’s precisely what our “Finetune LLMs” demo will guide you through — refining an LLM to meet specialized needs for a more nuanced and valuable prompt response.

The general workflow for finetuning LLMs with the Saturn Cloud LLM Framework is as follows:

1. Create a Hugging Face Dataset in the correct format.
2. Run the `dataprep.py` script, which turns your input data into text-based prompts and the data used in training: input IDs, labels, and the attention mask.
3. Run the `finetune.py` script to fine-tune the model.
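To make the data preparation step more concrete, here is a minimal sketch of how a prompt and response pair can be turned into input IDs, labels, and an attention mask. It is not the demo's `dataprep.py`: the whitespace tokenizer, the `encode_example` helper, and the choice to mask prompt tokens are assumptions made for illustration. A real pipeline would use the tokenizer that ships with the model being finetuned.

```python
from typing import Dict, List

# -100 is the label value that PyTorch's cross-entropy loss ignores by default,
# so positions carrying it do not contribute to the training loss.
IGNORE_INDEX = -100
PAD_ID = 0


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Toy whitespace vocabulary (hypothetical stand-in for a real tokenizer)."""
    vocab = {"<pad>": PAD_ID}
    for text in texts:
        for word in text.split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode_example(prompt: str, response: str,
                   vocab: Dict[str, int], max_len: int) -> Dict[str, List[int]]:
    """Build fixed-length input IDs, labels, and attention mask for one example."""
    prompt_ids = [vocab[word] for word in prompt.split()]
    response_ids = [vocab[word] for word in response.split()]

    input_ids = (prompt_ids + response_ids)[:max_len]
    # Assumption: only the response is learned, so prompt positions are masked.
    labels = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_len]
    attention_mask = [1] * len(input_ids)

    # Right-pad every field to max_len; padding is ignored by loss and attention.
    pad = max_len - len(input_ids)
    return {
        "input_ids": input_ids + [PAD_ID] * pad,
        "labels": labels + [IGNORE_INDEX] * pad,
        "attention_mask": attention_mask + [0] * pad,
    }


prompt = "Question: who painted Water Lilies ? Answer:"
response = "Claude Monet"
vocab = build_vocab([prompt, response])
example = encode_example(prompt, response, vocab, max_len=12)
```

Masking the prompt is one common convention for instruction-style finetuning. Training on the full sequence is also possible, in which case the labels would simply mirror the input IDs.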

All of our code is sectioned off into the following main components:

- `llm`: contains all the “library” code to facilitate LLM applications and functional tasks.
- `build_examples`: contains scripts used to prepare the data used in `examples`. Users are not expected to use this directory.
- `starting_points`: contains code templates you can implement to apply this repository to your own data.
- `examples`: contains examples of using the framework on sample datasets. You can think of `examples` as the code in `starting_points` implemented for specific datasets.

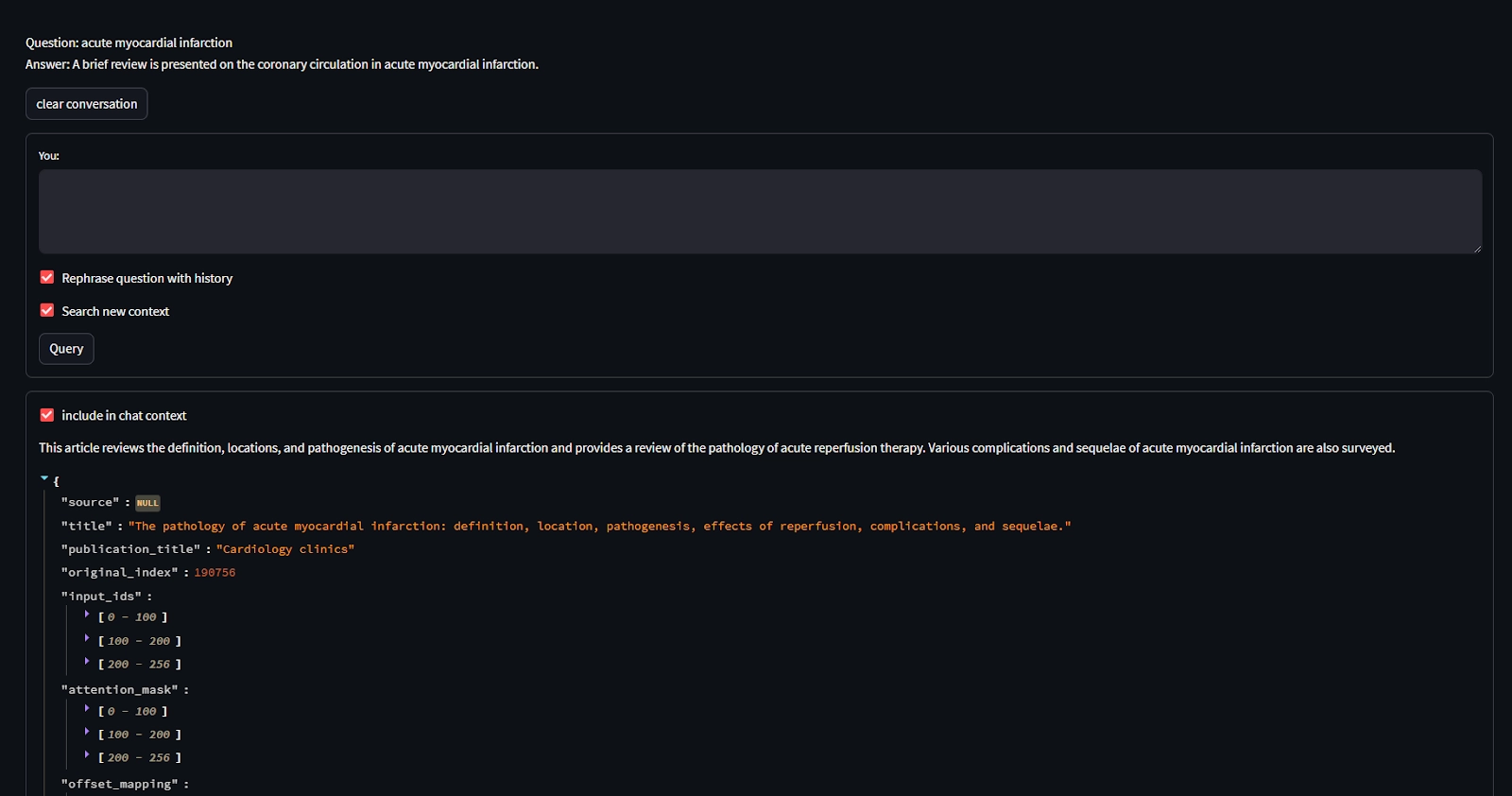

QA with Your Documents

Harnessing the power of LLMs isn’t just about conversational chatbots and nuanced interactions; one of the strategic ways to leverage these models is for navigating and extracting information from documents.

In many professional contexts, sifting through documents can be time-consuming and laborious. Whether it’s ensuring compliance by referencing a 200-page regulatory document, finding specific details from an extensive report, or answering a client’s query by referring to a lengthy contract, time is of the essence.

That’s where our “QA with Your Documents” demo can help. You can instantly access a wealth of information by training an LLM to understand and navigate your document base. This demo will help you turn overwhelming stacks of documents into a manageable and efficient Q&A system.

To get started:

1. Once you are in the Jupyter instance, open a terminal. Simply type `make` to begin the environment installation process.
2. Once your Conda environment finishes installing, activate the environment: `conda activate bert-qa`
3. Download a document. Here is an example: `python3 llm/qa/cli/main.py pubmed https://pubmed.ncbi.nlm.nih.gov/38447573/`
4. Launch the Streamlit application: `streamlit run llm/qa/streamlit/app.py`
5. View your application via the App URL or `localhost` if you’re connected via VS Code.
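For intuition about what a document Q&A system does before a model answers, the sketch below splits a document into chunks and ranks them against a question. The demo's actual retrieval method is not described here, so this is only a stand-in: it uses a simple bag-of-words cosine similarity, and the function names (`split_into_chunks`, `top_chunks`) are hypothetical. A production system would more likely compare learned embeddings.

```python
import math
import re
from collections import Counter
from typing import List


def split_into_chunks(text: str, max_words: int = 40) -> List[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_chunks(question: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the question, best first."""
    question_vec = bag_of_words(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(question_vec, bag_of_words(chunk)),
        reverse=True,
    )
    return ranked[:k]


chunks = [
    "The contract term is twelve months starting in January.",
    "Payment is due within thirty days of each invoice.",
    "Either party may terminate with sixty days written notice.",
]
best = top_chunks("When is payment due?", chunks, k=1)
```

The highest-ranked chunks would then be passed to the question-answering model as context, which keeps the model focused on the relevant part of a long document.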

LLM Model Serving Endpoints

Deploying AI models encompasses more than training and fine-tuning models; it requires setting up reliable, robust, and quick-response endpoints to serve them. This is the need that the “LLM Model Serving Endpoints” demo addresses. Its main goal is to enable secure, dependable, real-time interactions with your trained model without the complexity of configuring public networking yourself. You can open the demo to see model serving in a development or production environment.
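As a rough illustration of what a serving endpoint involves, the sketch below wraps a placeholder model in a small HTTP handler using only the Python standard library. It does not reflect the demo's actual serving stack: the `/generate` route, the `prompt` and `completion` field names, and the `generate` stub are all assumptions for the sake of the example.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def generate(prompt: str) -> str:
    """Placeholder for a real model call (hypothetical); it only echoes the prompt."""
    return f"echo: {prompt}"


def handle_payload(raw_body: bytes) -> Tuple[int, dict]:
    """Validate a JSON request body and return (HTTP status, response payload)."""
    try:
        payload = json.loads(raw_body)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return 400, {"error": "body must be valid JSON"}
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


class GenerateHandler(BaseHTTPRequestHandler):
    """Serves POST /generate; every other POST path returns 404."""

    def do_POST(self) -> None:
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Start the server and block; call this explicitly when you want to run it."""
    HTTPServer(("0.0.0.0", port), GenerateHandler).serve_forever()
```

Keeping validation in `handle_payload`, separate from the HTTP handler, makes the request logic easy to test without opening a socket. Authentication, TLS, and scaling are left out here; those are the parts a managed deployment is meant to take care of.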

Conclusion

Saturn Cloud can help remove the complexities tied to LLMs' size and computational demands, providing a scalable environment without sacrificing ease of use for data scientists. With its cloud-based environment, you can work beyond the limits of standard workstations without worrying about infrastructure details. Doing so lets you concentrate on what really matters: turning your data into valuable insights.

Saturn Cloud’s suite of LLM demos offers a step-by-step introduction to get your entire environment provisioned with a single click of a button. With Saturn Cloud, navigating the landscape of LLMs and collaborating on projects with your team has never been more straightforward and streamlined. So embark on this journey with Saturn Cloud and unlock the full potential of LLMs. Happy modeling!

About Saturn Cloud

Saturn Cloud is a portable AI platform that installs securely in any cloud account. Build, deploy, scale, and collaborate on AI/ML workloads with no long-term contracts and no vendor lock-in.

Saturn Cloud provides customizable, ready-to-use cloud environments for collaborative data teams.

Try Saturn Cloud and join thousands of users moving to the cloud without having to switch tools.